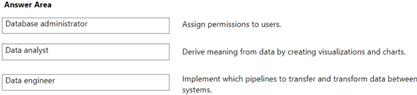

Question 1

DRAG DROP

Match the job roles to the appropriate tasks.

To answer, drag the appropriate role from the column on the left to its task on the right. Each role may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Answer:

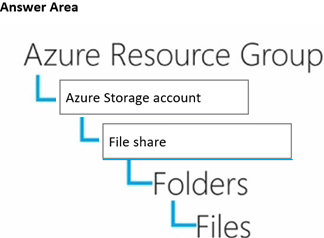

Question 2

DRAG DROP

Match the Azure Data Lake Storage Gen2 terms to the appropriate levels in the hierarchy.

To answer, drag the appropriate term from the column on the left to its level on the right. Each term may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Answer:

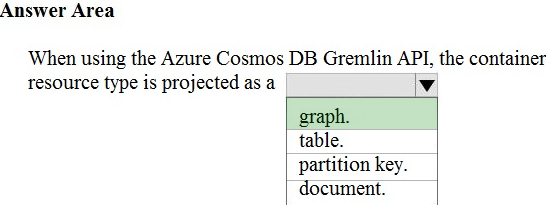

Question 3

HOTSPOT

To complete the sentence, select the appropriate option in the answer area.

Hot Area:

Answer:

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/create-graph-gremlin-console

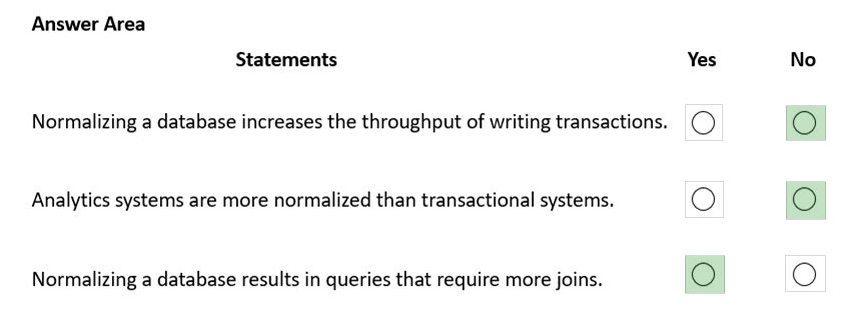

Question 4

HOTSPOT

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: No

Database normalization is the process of restructuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity.

Full normalization generally does not improve performance; in fact, it can often make it worse, but it keeps your data free of duplicates.
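To make the redundancy point concrete, here is a minimal Python sketch that splits hypothetical flat order rows (the customer names, cities, and amounts are invented for illustration) into a customers table and an orders table. Each customer's details end up stored once, and each order keeps only a `customer_id` reference.

```python
# Hypothetical flat (unnormalized) order rows: customer details repeat on every order.
flat_orders = [
    {"order_id": 1, "customer_id": 10, "customer_name": "Contoso", "city": "Seattle", "amount": 250},
    {"order_id": 2, "customer_id": 10, "customer_name": "Contoso", "city": "Seattle", "amount": 120},
    {"order_id": 3, "customer_id": 20, "customer_name": "Fabrikam", "city": "Denver", "amount": 75},
]


def normalize(rows):
    """Split flat rows into a customers table and an orders table.

    Customer attributes are stored once per customer_id; each order keeps
    only a foreign key (customer_id) that points back to its customer.
    """
    customers = {}
    orders = []
    for row in rows:
        # Assumes a customer's details are identical on every row (last row wins).
        customers[row["customer_id"]] = {
            "customer_name": row["customer_name"],
            "city": row["city"],
        }
        orders.append({
            "order_id": row["order_id"],
            "customer_id": row["customer_id"],
            "amount": row["amount"],
        })
    return customers, orders


customers, orders = normalize(flat_orders)
print(len(customers), len(orders))  # 2 3
```

The three rows now hold the "Contoso, Seattle" pair once instead of twice. A real design would also need to decide what happens when rows disagree about a customer's details; this sketch simply keeps the last one seen.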

Box 2: No

Analytics systems are denormalized to increase performance.

Transactional database systems are normalized to increase data consistency.

Box 3: Yes

Transactional database systems are more normalized and therefore require more joins.
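The join cost can be illustrated with Python's built-in `sqlite3` module. In this sketch, which uses invented tables and data rather than any particular product's schema, the normalized tables need a `JOIN` to produce a sales-by-customer report, while a denormalized copy answers the same question from a single table at the price of repeating customer names on every row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Normalized (transactional) schema: customer details live in one table only.
cur.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(customer_id),
    amount REAL
);
INSERT INTO customers VALUES (10, 'Contoso', 'Seattle'), (20, 'Fabrikam', 'Denver');
INSERT INTO orders VALUES (1, 10, 250), (2, 10, 120), (3, 20, 75);
""")

# Reporting on the normalized schema needs a JOIN to bring customer names back in.
normalized_report = cur.execute("""
    SELECT c.name, SUM(o.amount)
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# Denormalized (analytical) copy: names repeat on each row, so no JOIN is needed.
cur.executescript("""
CREATE TABLE sales_flat AS
SELECT o.order_id, c.name, c.city, o.amount
FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id;
""")
flat_report = cur.execute(
    "SELECT name, SUM(amount) FROM sales_flat GROUP BY name ORDER BY name"
).fetchall()

print(normalized_report)                 # [('Contoso', 370.0), ('Fabrikam', 75.0)]
print(normalized_report == flat_report)  # True
conn.close()
```

Both queries return the same totals; the trade-off is that the flat table stores "Contoso" twice, which is the redundancy normalization is meant to remove.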

Reference:

https://www.sqlshack.com/what-is-database-normalization-in-sql-server

Question 5

Your company is designing a data store for internet-connected temperature sensors.

The collected data will be used to analyze temperature trends.

Which type of data store should you use?

- A. relational

- B. time series

- C. graph

- D. columnar

Answer:

B

Time series data is a set of values organized by time. Time series databases typically collect large amounts of data in real time from a large number of sources.

Updates are rare, and deletes are often done as bulk operations. Although the records written to a time-series database are generally small, there are often a large number of records, and total data size can grow rapidly.
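As a rough illustration of that access pattern, the sketch below assumes the readings fit in memory and uses a hypothetical `TimeSeriesStore` class (not an Azure API). It keeps readings sorted by timestamp, treats writes as appends, deletes old data in bulk, and averages time ranges to expose a temperature trend.

```python
from bisect import bisect_left, bisect_right
from datetime import datetime, timedelta
from statistics import mean


class TimeSeriesStore:
    """Hypothetical in-memory time series store, for illustration only."""

    def __init__(self):
        self._times = []   # timestamps, always kept sorted
        self._values = []  # readings, aligned index-for-index with self._times

    def append(self, ts, value):
        # Readings usually arrive in time order, so this normally lands at the end.
        i = bisect_right(self._times, ts)
        self._times.insert(i, ts)
        self._values.insert(i, value)

    def query_range(self, start, end):
        # Return (timestamp, value) pairs with start <= timestamp < end.
        lo = bisect_left(self._times, start)
        hi = bisect_left(self._times, end)
        return list(zip(self._times[lo:hi], self._values[lo:hi]))

    def delete_before(self, cutoff):
        # Bulk delete: drop every reading older than cutoff in one slice.
        i = bisect_left(self._times, cutoff)
        del self._times[:i]
        del self._values[:i]

    def mean_between(self, start, end):
        values = [v for _, v in self.query_range(start, end)]
        return mean(values) if values else None


store = TimeSeriesStore()
t0 = datetime(2024, 1, 1)
for i, temp in enumerate([20.0, 20.5, 21.0, 21.5, 22.0, 22.5]):
    store.append(t0 + timedelta(minutes=10 * i), temp)  # one reading every 10 minutes

half_hour = timedelta(minutes=30)
first = store.mean_between(t0, t0 + half_hour)                  # readings at :00, :10, :20
second = store.mean_between(t0 + half_hour, t0 + 2 * half_hour)  # readings at :30, :40, :50
print(first, second)  # 20.5 22.0 (a rising trend)

store.delete_before(t0 + half_hour)
print(len(store.query_range(t0, t0 + 2 * half_hour)))  # 3
```

There is deliberately no update method: in this model a reading, once written, is only ever read or removed as part of a time range.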

Reference:

https://docs.microsoft.com/en-us/azure/architecture/guide/technology-choices/data-store-overview

Question 6

HOTSPOT

To complete the sentence, select the appropriate option in the answer area.

Hot Area:

Answer:

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/transparent-data-encryption-tde-overview?tabs=azure-portal

Question 7

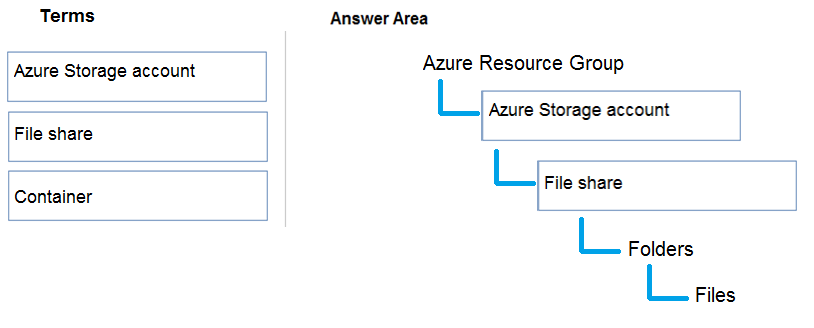

DRAG DROP

Match the Azure Data Lake Storage Gen2 terms to the appropriate levels in the hierarchy.

To answer, drag the appropriate term from the column on the left to its level on the right. Each term may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Select and Place:

Answer:

Box 1: Azure Storage account

Azure file shares are deployed into storage accounts, which are top-level objects that represent a shared pool of storage.

Box 2: File share
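The two-level hierarchy in this answer can be modeled with a couple of Python dataclasses. This is a conceptual sketch only: `StorageAccount`, `FileShare`, and `add_share` are hypothetical names, not the Azure SDK. The URL format follows the public Azure Files endpoint pattern, in which the storage account name forms the host and the share name is the first path segment.

```python
from dataclasses import dataclass, field


@dataclass
class FileShare:
    """Second level (hypothetical model): a share lives inside one storage account."""
    name: str
    files: dict = field(default_factory=dict)  # file path -> contents


@dataclass
class StorageAccount:
    """Top level (hypothetical model): a shared pool of storage holding file shares."""
    name: str
    shares: dict = field(default_factory=dict)  # share name -> FileShare

    def add_share(self, share_name):
        share = FileShare(share_name)
        self.shares[share_name] = share
        return share

    def file_url(self, share_name, file_path):
        # Azure Files endpoints follow https://<account>.file.core.windows.net/<share>/<path>
        if share_name not in self.shares:
            raise KeyError(f"no share named {share_name!r} in account {self.name!r}")
        return f"https://{self.name}.file.core.windows.net/{share_name}/{file_path}"


account = StorageAccount("contosostorage")
reports = account.add_share("reports")
reports.files["2024/q1.csv"] = b"region,total\n"
print(account.file_url("reports", "2024/q1.csv"))
# https://contosostorage.file.core.windows.net/reports/2024/q1.csv
```

A share cannot be addressed without first naming its account, which mirrors the answer above: the storage account is the top-level object and the file share sits beneath it.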

Reference:

https://docs.microsoft.com/en-us/azure/storage/files/storage-how-to-create-file-share

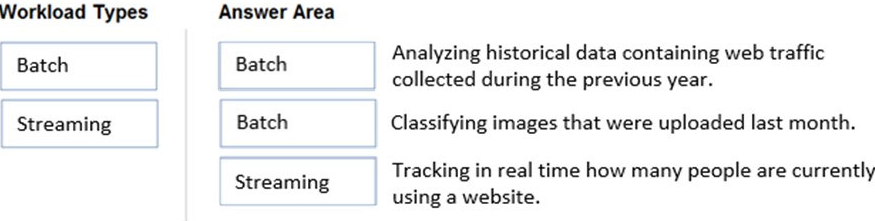

Question 8

DRAG DROP

Match the types of workloads to the appropriate scenarios.

To answer, drag the appropriate workload type from the column on the left to its scenario on the right. Each workload type may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Select and Place:

Answer:

Box 1: Batch

The batch processing model requires a set of data that is collected over time, while the stream processing model requires data to be fed into an analytics tool in real time, often in micro-batches.

The batch processing model handles a large batch of data, while the stream processing model handles individual records or micro-batches of a few records.

Batch processing operates over all or most of the data, whereas stream processing operates over data in a rolling window or over the most recent record.

Box 2: Batch

Box 3: Streaming
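The difference between the two models can be sketched in a few lines of Python. The readings are invented and the window size of 3 is an arbitrary assumption: the batch function waits for the complete data set, while the streaming class updates its result as each record arrives and considers only the most recent records.

```python
from collections import deque
from statistics import mean


def batch_average(readings):
    """Batch: wait until the whole data set is collected, then process all of it."""
    return mean(readings)


class RollingAverage:
    """Streaming: update the result as each record arrives, over a sliding window."""

    def __init__(self, window_size):
        # A bounded deque drops the oldest record automatically when full.
        self.window = deque(maxlen=window_size)

    def update(self, reading):
        self.window.append(reading)
        return mean(self.window)


readings = [10, 12, 14, 16, 18]
print(batch_average(readings))  # 14

stream = RollingAverage(window_size=3)
print([stream.update(r) for r in readings])  # [10, 11, 12, 14, 16]
```

The batch result is a single number computed once over everything; the streaming result is a sequence of answers, each available as soon as its record arrives.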

Reference:

https://k21academy.com/microsoft-azure/dp-200/batch-processing-vs-stream-processing

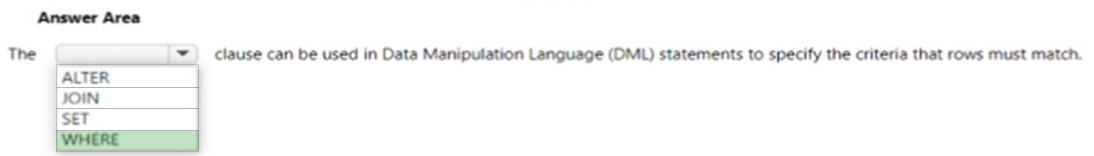

Question 9

HOTSPOT

Select the answer that correctly completes the sentence.

Hot Area:

Answer:

Question 10

DRAG DROP

Match the Azure services to the appropriate locations in the architecture.

To answer, drag the appropriate service from the column on the left to its location on the right. Each service may be used once, more than once, or not at all.

NOTE: Each correct match is worth one point.

Select and Place:

Answer:

Box Ingest: Azure Data Factory

You can build a data ingestion pipeline with Azure Data Factory (ADF).

Box Preprocess & model: Azure Synapse Analytics

Use Azure Synapse Analytics to preprocess data and deploy machine learning models.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-data-ingest-adf

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/sqldw-walkthrough
