Microsoft PL-500 Practice Test

Microsoft Power Automate RPA Developer

Last exam update: Apr 17, 2025

Question 1

Case study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

City Power and Light is one of the biggest energy companies in North America. They extract, produce and transport oil. The company has more than 50 offices and 100 oil extraction facilities throughout the United States, Canada, and Mexico. They use railways, trucks, and pipelines to move oil and gas from their facilities.

The company provides the following services:

Produce oil from oil sands safely, responsibly, and reliably.

Refine crude oil into high-quality products.

Develop and manage wind power facilities.

Transport oil to different countries/regions.

City Power and Light uses various Microsoft software products to manage its daily activities and run its mission-critical applications.

Requirements

ManagePipelineMaintenanceTasks

A user named Admin1 creates a cloud flow named ManagePipelineMaintenanceTasks. Admin1 applies a data loss prevention (DLP) policy to the flow. Admin1 shares the flow with a user named PipelineManager1 as co-owner. You must determine the actions that PipelineManager1 can perform.

MaintenanceScheduler

You create a cloud flow that uses a desktop flow. The desktop flow connects to third-party services to fetch information. You must not permit the desktop flow to run for more than 20 minutes.
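In a cloud flow, a limit like this is typically set through the Timeout option in the desktop flow action's settings, which takes an ISO 8601 duration (PT20M for 20 minutes). The Python sketch below only illustrates the same idea, a hard wall-clock limit on a child process, using the standard `subprocess.run` timeout; the `slow_job` command is a placeholder and is not how Power Automate launches desktop flows.

```python
import subprocess
import sys
from typing import List

# 20-minute limit from the MaintenanceScheduler requirement, in seconds.
MAX_RUNTIME_SECONDS = 20 * 60


def run_with_limit(cmd: List[str], limit_seconds: float = MAX_RUNTIME_SECONDS) -> bool:
    """Run `cmd`; return True if it finished in time, False if it was stopped.

    When the limit passes, subprocess.run kills the child and raises
    TimeoutExpired, so a hung third-party call cannot run indefinitely.
    """
    try:
        subprocess.run(cmd, timeout=limit_seconds, check=False)
        return True
    except subprocess.TimeoutExpired:
        return False


# Placeholder workload standing in for the desktop flow: sleeps for 2 seconds.
slow_job = [sys.executable, "-c", "import time; time.sleep(2)"]
print(run_with_limit(slow_job, limit_seconds=0.5))  # False
```

The design choice mirrors the requirement: the caller, not the long-running task, owns the deadline.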

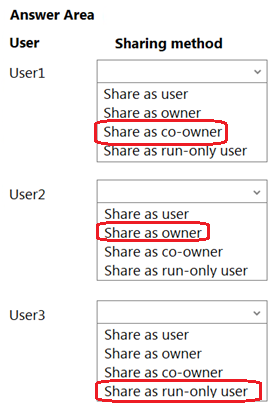

You must configure sharing for MaintenanceScheduler to meet the following requirements:

User1 must be able to work with you to modify the desktop flow.

User2 must be able to access and review the run history for the cloud flow.

You must grant User3 permissions to run but not modify the desktop flow.

ERPDataOperations flow

City Power and Light uses an enterprise resource planning (ERP) system. The ERP system does not have an API.

Each day the company receives an email that contains an attachment. The attachment lists orders from the company's rail transportation partners. You must create an automation solution that reads the contents of the email and writes records to the ERP system. The solution must pass credentials from a cloud flow to a desktop flow.

RailStatusUpdater

City Power and Light actively monitors all products in transit. You must create a flow named RailStatusUpdater that manages communications with railways that transport the company's products. RailStatusUpdater includes five desktop flow actions.

You must run the desktop flows in attended mode during testing. You must run the desktop flows in unattended mode after you deploy the solution. You must minimize administrative efforts.

Packaging

All flow automations must be created in a solution. All required components to support the flows must be included in the solution.

Issues

ProductionMonitor flow

You create a cloud flow named ProductionMonitor, which uses the Manually trigger a flow trigger. You plan to trigger ProductionMonitor from a cloud flow named ProdManager.

You add a Run a Child flow action in ProdManager to trigger ProductionMonitor. When you attempt to save ProdManager, the following error message displays:

Request to XRM API failed with error: 'Message:Flow client error returned with status code Bad request and details (error: {code:ChildFlowUnsupportedForinvokerConnections, message: The workflow with id 8d3bcde7-7e98-eb11-b1ac-000d3a32d53f, named FlowA cannot be used as a child workflow because child workflows only support embedded connections. }}Code 0x80060467 InnerError.'

CapacityPlanning flow

Developers within the company use cloud flows to access data from an on-premises capacity planning system.

You observe significant increases to the volume of traffic that the on-premises data gateway processes each day. You must minimize gateway failures.

DataCollector flow

You have a desktop flow that interacts with a web form. The flow must write data to several fields on the form.

You are testing the flow. The flow fails when attempting to write data to any field on the web form.

RailStatusUpdater flow

The RailStatusUpdater flow occasionally fails due to machine connection errors. You can usually get the desktop flow to complete by resubmitting the cloud flow run. You must automate the retry process to ensure that you do not need to manually resubmit the cloud flow when machine connection errors occur.

You need to resolve the issue reported with the RailStatusUpdater flow.

What are two possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. Call a separate child cloud flow to perform the desktop flow a second time.

- B. Create a duplicate action for the desktop flow to run after the first desktop flow.

- C. Put the desktop flow action into a Do until loop. Run until the desktop flow is successful.

- D. Create a duplicate action for the desktop flow and configure the duplicate action to run if the first desktop flow action fails.

Answer:

C, D
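Option C amounts to a bounded retry loop: run the desktop flow action, check whether it succeeded, and stop once it does or once a limit is reached. The Python sketch below only illustrates that control flow; `run_desktop_flow` and `make_flaky_flow` are hypothetical stand-ins for the desktop flow action, `ConnectionError` stands in for a machine connection error, and `max_attempts` plays the role of the loop's count limit.

```python
from typing import Callable, Optional


def run_until_success(action: Callable[[], None], max_attempts: int = 3) -> int:
    """Call `action` until it completes without raising ConnectionError.

    Returns the number of attempts used. Re-raises the last error when every
    attempt fails, so a persistent outage still surfaces as a failed run.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[ConnectionError] = None
    for attempt in range(1, max_attempts + 1):
        try:
            action()
            return attempt  # success ends the loop, like the Do until condition
        except ConnectionError as err:  # hypothetical machine connection error
            last_error = err
    assert last_error is not None
    raise last_error


def make_flaky_flow(failures: int) -> Callable[[], None]:
    """Hypothetical desktop flow that fails `failures` times, then succeeds."""
    state = {"calls": 0}

    def run_desktop_flow() -> None:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("machine connection error")

    return run_desktop_flow


print(run_until_success(make_flaky_flow(failures=2), max_attempts=3))  # 3
```

Bounding the attempts matters: an unbounded loop would keep the cloud flow running indefinitely if the machine never reconnects.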

Question 2

You have an automation solution that uses a desktop flow. The flow reads data from a file that is stored on a user's machine and writes the data to an application. You import the solution to an environment that is connected to another user's machine.

The user reports that the flow fails. An alert indicates that the path to a file does not exist. You confirm that the file is present on the user's desktop.

You need to resolve the issue.

What should you do?

- A. Use the Get Windows environment variable action to read the USERNAME environment variable and use the value in the path to the user's desktop.

- B. Change access rights for the file to allow read operations for the PAD process.

- C. Move the file to the user's OneDrive storage.

- D. Change the access rights for the file to allow read operations for the current user.

Answer:

A
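Option A works because the path is no longer hardcoded to the original user's profile folder. The Python sketch below builds the same kind of path from the USERNAME environment variable; the default `C:\Users\<name>\Desktop` layout and the file name `input.csv` are assumptions for illustration (a desktop redirected to OneDrive, for example, would live elsewhere).

```python
import ntpath
import os
from typing import Optional


def desktop_file_path(file_name: str, username: Optional[str] = None) -> str:
    """Build a Windows desktop path for the current (or given) user.

    Assumes the default C:\\Users\\<name>\\Desktop profile layout.
    """
    if username is None:
        # Same idea as reading USERNAME with Get Windows environment variable.
        username = os.environ.get("USERNAME", "")
    if not username:
        raise RuntimeError("USERNAME environment variable is not set")
    # ntpath always joins with backslashes, even when run on non-Windows hosts.
    return ntpath.join("C:\\", "Users", username, "Desktop", file_name)


print(desktop_file_path("input.csv", username="User2"))
# C:\Users\User2\Desktop\input.csv
```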

Question 3

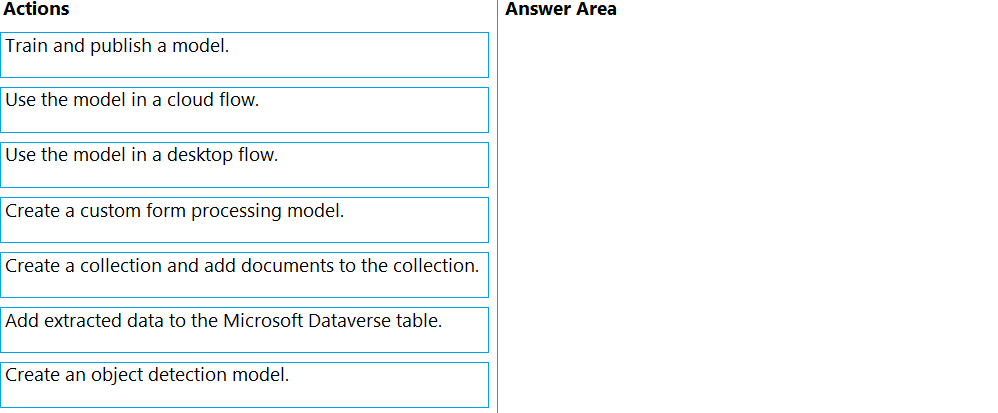

DRAG DROP

You are designing an automation that processes information from documents attached to emails.

You need to extract data from the attachments and insert the data into a custom Microsoft Dataverse table.

Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer:

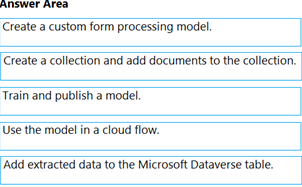

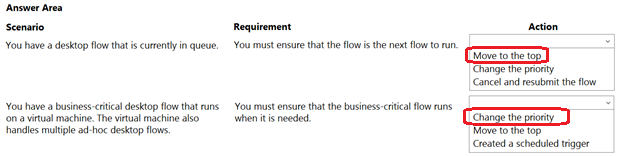

Question 4

HOTSPOT

You develop automation solutions for a company.

You need to implement actions to meet the companys requirements.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Question 5

DRAG DROP

Case study

Background

First Up Consultants is a professional services organization headquartered in Europe, with offices in North America. The company supports small- to medium-sized and enterprise organizations with a range of information technology, project management, change management, and finance management consultancy needs. The organization employs 500 full-time consultants and engages with over 1,000 external contractors to support the delivery of its various projects.

Current environment

The company has been using Microsoft Power Platform for several years and currently has the following implemented:

A model-driven app named Project Planning Application that is used by the project management office (PMO) team within the company to plan, schedule and collate information for each client project. The application supports the following functionality areas:

o Storage for project-level information, such as start date, end date and client data.

o A series of inputs to capture detailed information for statements of work as part of a standard document format. This information is manually entered by the PMO team.

o Information regarding internal and external consultants is stored within a custom table called Resources.

o Information within the Resources table regarding full-time consultants is typically populated manually by the PMO team. For external consultants, the company regularly attends industry events and collates business cards for potential new employees or external contractors. Information regarding these individuals is then manually entered into the application. The PMO team then asks suitable candidates to complete an application form in Microsoft Word standard format and upload it via a secure URL. Again, the PMO team then manually enters the data into Project Planning Application.

A canvas app named Time Entry Application is used by the employees and external contractors to capture the time worked on projects. The application has been configured with the following defined controls:

o dpStartDate: A date picker control to indicate the start date of the time entry.

o dpEndDate: A date picker control to indicate the end date of the time entry.

o inptDescription: A control used to indicate the type of activity and project worked on.

A mobile app stores the current user's email address as part of a variable called varUserName.

The company uses Microsoft SharePoint on premises to store all sensitive documents. Company policy mandates that all client-related documents are stored within this environment only.

The company uses SAP as its back-end accounting system. The company maintains separate SAP systems in each legal jurisdiction where it is based. The system is relied upon for the following critical business processes:

External contractors working on a project send their invoices to a dedicated mailbox that is monitored by the company's accounting team. The accounting team must then manually process these invoices into SAP at the end of each month. Due to the number of external contractors, hundreds of invoices must be processed monthly.

Basic API access for the SAP system is provided via a mixture of native application APIs and a middleware Simple Object Access Protocol (SOAP) API hosted on premises. The middleware API supports the ability to post time entries against the relevant projects in any SAP system by specifying the system and client ID as a query parameter in the URL. The middleware API was developed several years ago, and the source code is no longer available. Data is returned in XML format, which can then be analyzed further.

When a project enters the closure phase, members of the PMO team need to navigate to SAP, enter some details, and then capture information from a PDF that is generated and opened on the screen, such as the final settlement amount. This information is then manually entered into Project Planning Application.

The company maintains a separate system containing detailed profile information regarding internal employees. The PMO team currently manually enters information from this system into the Resources table. Developers in the company have created a modern REST API for this system, which is actively maintained. The system contains highly sensitive personal information (PI) regarding each employee.

The company has several on-premises Windows environments that it has identified as suitable for usage because they exist within the same physical network as SAP and the middleware API. These environments must be patched regularly, and all activities targeting these environments must be automated.

Project Planning Application

Rather than manually populating the statement of work information, users should place it in a SharePoint folder for this information to be extracted and mapped to the correct inputs.

A new automation is required to integrate with the profile information system. Because the company plans to consume this data in several ways, a streamlined mechanism for working with the API is required to improve reusability.

An automation is required to handle the project closure steps in SAP and to store the relevant information from SAP into the app.

Once a new candidate uploads a completed application form, information from the form should be copied automatically into Project Planning Application.

Time Entry Application

Time Entry Application needs to be extended to integrate alongside SAP, ensuring postings for time entries are processed successfully. When a time entry is submitted, the entry should be posted automatically to SAP.

Time entries must always be submitted with relevant text that indicates the type of activity and project worked on.

The automation should be able to detect and handle any errors that occur when posting individual time entries.

Invoice Processing

Invoices sent to the accounts mailbox must be processed automatically and created as invoices within SAP.

General

Development efforts should be avoided or mitigated when there is native functionality already available.

Reusability of components is desired to assist citizen developers in creating any solutions in future.

All automation activities should run without disruption during an outage or a patching cycle.

Use of username and password credentials should be avoided.

Automations should not rely on human intervention to execute.

Use of public cloud file services should be restricted.

Where possible, JSON should be the preferred format when transferring data.

Issues

Users within the PMO team report that it takes many hours to put the data from each business card into the system.

You create the automation to process the time entries, called Submit Time Entry, and add it to the application. Users report issues with the formula used to connect to the automation.

During a monthly patch cycle, IT support team members cannot identify the correct steps to patch the machine without disrupting any automation.

While performing an audit of the new solution during the test phase, the company's information security team identifies that users can freely save confidential documents to OneDrive for Business.

When creating the automation for the project closure process, you discover that some of the required information needs to be exported via the SAP GUI and extracted from a comma-separated value (CSV) file.

When building the automation for the profile information system, you identify that all requests into the API will fail unless the following HTTP header value is specified:

o Accept: application/json
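Every client of the profile information system therefore has to send that header on each request, which is one reason to wrap the API once rather than in each flow. The Python sketch below shows the header on a request built with the standard library; the base URL and the `employees/{id}` route are hypothetical, and the request is only constructed, not sent.

```python
import urllib.request

# Hypothetical base URL; the case study does not name the endpoint.
BASE_URL = "https://profiles.example.com/api"


def build_profile_request(employee_id: str) -> urllib.request.Request:
    """Build a GET request that always carries the required Accept header."""
    return urllib.request.Request(
        f"{BASE_URL}/employees/{employee_id}",
        headers={"Accept": "application/json"},
        method="GET",
    )


req = build_profile_request("E123")
print(req.full_url)              # https://profiles.example.com/api/employees/E123
print(req.get_header("Accept"))  # application/json
```

Passing the request to `urllib.request.urlopen` would send it; centralizing the header in one builder keeps individual callers from forgetting it.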

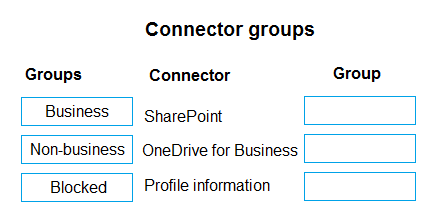

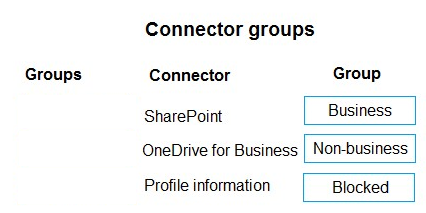

You need to determine the correct group for each connector used in the automations.

Which groups should you use? To answer, move the appropriate connector to the correct group. You may use each group once, more than once, or not at all.

You may need to move the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Question 6

Case study

Background

First Up Consultants is a professional services organization headquartered in Europe, with offices in North America. The company supports small- to medium-sized and enterprise organizations with a range of information technology, project management, change management, and finance management consultancy needs. The organization employs 500 full-time consultants and engages with over 1,000 external contractors to support the delivery of its various projects.

Current environment

The company has been using Microsoft Power Platform for several years and currently has the following implemented:

A model-driven app named Project Planning Application that is used by the project management office (PMO) team within the company to plan, schedule and collate information for each client project. The application supports the following functionality areas:

o Storage for project-level information, such as start date, end date and client data.

o A series of inputs to capture detailed information for statements of work as part of a standard document format. This information is manually entered by the PMO team.

o Information regarding internal and external consultants is stored within a custom table called Resources.

o Information within the Resources table regarding full-time consultants is typically populated manually by the PMO team. For external consultants, the company regularly attends industry events and collates business cards for potential new employees or external contractors. Information regarding these individuals is then manually entered into the application. The PMO team then asks suitable candidates to complete an application form in Microsoft Word standard format and upload it via a secure URL. Again, the PMO team then manually enters the data into Project Planning Application.

A canvas app named Time Entry Application is used by the employees and external contractors to capture the time worked on projects. The application has been configured with the following defined controls:

o dpStartDate: A date picker control to indicate the start date of the time entry.

o dpEndDate: A date picker control to indicate the end date of the time entry.

o inptDescription: A control used to indicate the type of activity and project worked on.

A mobile app stores the current user's email address as part of a variable called varUserName.

The company uses Microsoft SharePoint on premises to store all sensitive documents. Company policy mandates that all client-related documents are stored within this environment only.

The company uses SAP as its back-end accounting system. The company maintains separate SAP systems in each legal jurisdiction where it is based. The system is relied upon for the following critical business processes:

External contractors working on a project send their invoices to a dedicated mailbox that is monitored by the company's accounting team. The accounting team must then manually process these invoices into SAP at the end of each month. Due to the number of external contractors, hundreds of invoices must be processed monthly.

Basic API access for the SAP system is provided via a mixture of native application APIs and a middleware Simple Object Access Protocol (SOAP) API hosted on premises. The middleware API supports the ability to post time entries against the relevant projects in any SAP system by specifying the system and client ID as a query parameter in the URL. The middleware API was developed several years ago, and the source code is no longer available. Data is returned in XML format, which can then be analyzed further.

When a project enters the closure phase, members of the PMO team need to navigate to SAP, enter some details, and then capture information from a PDF that is generated and opened on the screen, such as the final settlement amount. This information is then manually entered into Project Planning Application.

The company maintains a separate system containing detailed profile information regarding internal employees. The PMO team currently manually enters information from this system into the Resources table. Developers in the company have created a modern REST API for this system, which is actively maintained. The system contains highly sensitive personal information (PI) regarding each employee.

The company has several on-premises Windows environments that it has identified as suitable for usage because they exist within the same physical network as SAP and the middleware API. These environments must be patched regularly, and all activities targeting these environments must be automated.

Project Planning Application

Rather than manually populating the statement of work information, users should place it in a SharePoint folder for this information to be extracted and mapped to the correct inputs.

A new automation is required to integrate with the profile information system. Because the company plans to consume this data in several ways, a streamlined mechanism for working with the API is required to improve reusability.

An automation is required to handle the project closure steps in SAP and to store the relevant information from SAP into the app.

Once a new candidate uploads a completed application form, information from the form should be copied automatically into Project Planning Application.

Time Entry Application

Time Entry Application needs to be extended to integrate alongside SAP, ensuring postings for time entries are processed successfully. When a time entry is submitted, the entry should be posted automatically to SAP.

Time entries must always be submitted with relevant text that indicates the type of activity and project worked on.

The automation should be able to detect and handle any errors that occur when posting individual time entries.

Invoice Processing

Invoices sent to the accounts mailbox must be processed automatically and created as invoices within SAP.

General

Development efforts should be avoided or mitigated when there is native functionality already available.

Reusability of components is desired to assist citizen developers in creating any solutions in future.

All automation activities should run without disruption during an outage or a patching cycle.

Use of username and password credentials should be avoided.

Automations should not rely on human intervention to execute.

Use of public cloud file services should be restricted.

Where possible, JSON should be the preferred format when transferring data.

Issues

Users within the PMO team report that it takes many hours to put the data from each business card into the system.

You create the automation to process the time entries, called Submit Time Entry, and add it to the application. Users report issues with the formula used to connect to the automation.

During a monthly patch cycle, IT support team members cannot identify the correct steps to patch the machine without disrupting any automation.

While performing an audit of the new solution during the test phase, the company's information security team identifies that users can freely save confidential documents to OneDrive for Business.

When creating the automation for the project closure process, you discover that some of the required information needs to be exported via the SAP GUI and extracted from a comma-separated value (CSV) file.

When building the automation for the profile information system, you identify that all requests into the API will fail unless the following HTTP header value is specified:

o Accept: application/json

You need to determine the formula to use for the time entry posting automation.

Which formula should you use?

- A. Submit Time Entry.Run(dpStartDate.InputTextPlaceholder, dpEndDate.InputTextPlaceholder, inptDescription.Text)

- B. Submit Time Entry.Run(dpStartDate.SelectedDate, dpEndDate.SelectedDate, inptDescription.Text)

- C. Submit Time Entry.Run(dpStartDate.InputTextPlaceholder, dpEndDate.InputTextPlaceholder, varUserName)

- D. Submit Time Entry.Run(dpStartDate.SelectedDate, dpEndDate.SelectedDate, varUserName)

Answer:

B
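Option B passes the values the user actually entered: `SelectedDate` returns the chosen date, whereas `InputTextPlaceholder` is only the hint text shown when no date is entered, and `inptDescription.Text` carries the activity and project text that the requirements mandate (`varUserName` holds an email address). The Python sketch below is a hypothetical illustration of the payload such a flow might receive, serialized as JSON in line with the case study's format preference; the field names and validation rules are assumptions.

```python
import json
from datetime import date


def build_time_entry_payload(start: date, end: date, description: str) -> str:
    """Serialize one time entry as JSON after basic checks.

    Field names are hypothetical; they mirror dpStartDate.SelectedDate,
    dpEndDate.SelectedDate and inptDescription.Text from option B.
    """
    if not description.strip():
        # Requirement: entries must always include activity/project text.
        raise ValueError("description is required")
    if end < start:
        raise ValueError("end date precedes start date")
    return json.dumps(
        {
            "startDate": start.isoformat(),
            "endDate": end.isoformat(),
            "description": description.strip(),
        }
    )


print(build_time_entry_payload(date(2025, 4, 14), date(2025, 4, 18), "Design workshop, Project X"))
# {"startDate": "2025-04-14", "endDate": "2025-04-18", "description": "Design workshop, Project X"}
```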

Question 7

Case study

Background

Woodgrove Bank is a large, member-owned bank in the United States. Woodgrove Bank provides financial products with low customer fees and direct customer service.

Woodgrove Bank has 177 branches across the United States with 5,000 branch staff and supervisors serving over 750,000 members. The primary languages used by most members include English and Spanish when interacting with customer service representatives. The Woodgrove Bank headquarters is in California and has 450 office workers. The office workers include financial advisors, customer service representatives, finance clerks, and IT personnel.

Current environment. Bank applications

An application named Banker Desktop. The branch employees use this desktop app to review business transactions and to perform core banking updates.

An application named Member Management System. This application is a custom customer relationship management (CRM) that integrates with other systems by using an API interface.

An application named Fraud Finder. This application is a mission-critical, fraud management application that runs on the employees' desktops. The bank has experienced challenges integrating the application with other systems, and the application is expensive to support.

SharePoint Online provides an employee intranet as well as a member document management system that includes policies, contracts, statements, and financial planning documents.

Microsoft Excel is used to perform calculations and run macros. Branch employees may have multiple Microsoft Excel workbooks open on their desktop simultaneously.

Current environment. Bank devices

All supervisors are provided with a mobile device that can be used to access company email and respond to approval requests.

All branch employees and supervisors are provided with a Windows workstation.

Requirements. New member enrollment

Woodgrove Bank requires new members to sign up online to start the onboarding process. The bank requires some manual steps to be performed during the onboarding process.

First step:

Members complete an online Woodgrove Bank document and email the PDF attachment to the bank's shared mailbox for processing.

Second step:

Members are asked to provide secondary identification to their local branch, such as a utility bill, to validate their physical address.

Branch staff scan the secondary identification in English or Spanish using optical character recognition (OCR) technology.

Third step:

A branch supervisor approves the member's application from their mobile device.

Only supervisors are authorized to complete application approvals.

Fourth step:

Data that is received from applications must be validated to ensure it adheres to the bank's naming standards.

The bank has the following requirements for member data:

New members must be enrolled by using the document automation solution.

Member data is subject to regulatory requirements and should not be used for non-business purposes.

A desktop workflow is required to retrieve member information from the Member Management System on-demand or by using a cloud flow.

Requirements. Bank fees

The process for calculating bank fees includes:

using a shared Excel fee workbook with an embedded macro, and

an attended desktop flow that is required to automate the fee workbook process. The flow should open an Excel workbook and calculate the members' fees based on the number of products.

Requirements. Fraud detection

The bank has the following requirements to minimize fraud:

Branch employees must use the Fraud Finder application during onboarding to validate a member's identity with other third-party systems.

Branch employees must be able to search for a member in the Fraud Finder application by using a member's full name or physical address.

If fraudulent activity is identified, a notification with member details must be sent to the internal fraud investigation team.

Requirements. Technical

The bank has the following technical requirements:

Flows

The Fraud Finder application uses a custom connector with Power Automate to run fraud checks.

The application approval process triggers a cloud flow, which starts an attended desktop flow on the branch employee's workstation and completes the approval.

The banker desktop flow runs using the default priority.

An IT administrator is the co-owner of the banker desktop flow.

The IT department will be installing the required OCR language packs.

The Extract text with OCR action is used to import the members' secondary identification.

Member Management System

A secure Azure function requires a subscription key to retrieve member information.

Production flows must connect to the Member Management System with a custom connector. The connector uses the Azure function to perform programmatic retrievals, creates, and updates.

The host URL has been added to the custom connector as a new pattern.

A tenant-level Microsoft Power Platform data loss prevention (DLP) policy has been created to manage the production environment.

A developer creates a desktop flow to automate data entry into a test instance of the Member Management System.

A developer creates an on-demand attended desktop flow to connect to a data validation site and retrieve the most current information for a member.

Banker desktop application

A banker desktop flow is required to update the core banking system with other systems.

When a transaction is complete, the branch employee submits the request by using a submit button.

After submitting the request, an instant cloud flow calls an unattended desktop flow to complete the core banking update.

The banker desktop flow must be prioritized for all future transactions.

Deployment & testing

Development data must be confined to the development environment until the data is ready for user acceptance testing (UAT).

The production environment in SharePoint Online must connect to the development instance of the Member Management System.

Developers must be able to deploy software every two weeks during a scheduled maintenance window.

The banker desktop flow must continue to run during any planned maintenance.

The fraud custom connector requires a policy operation named EscalateForFraud with a parameter that uses the member's full name in the request.

Scalability

The bank requires a machine group to distribute the automation workload and to optimize productivity.

The IT administrator needs to silently register 20 new machines to Power Automate and then add them to the machine group.

Security

The IT administrator uses a service principal account for machine connection.

The IT administrator has the Desktop Flow Machine Owner role.

Issues

A branch staff member reports that the document automation solution is not processing new members' data and that emails are not being sent for approvals.

An IT administrator reports that the banker desktop flow has become unresponsive because of data that is queued in another flow.

Code

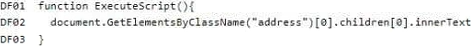

A Power Automate developer created the following script for the Member Management System desktop flow:

You need to create the custom connector that will be used to retrieve member information.

Which authentication option should you use?

- A. API key

- B. No authentication

- C. OAuth 2.0

- D. Basic

- E. Windows

Answer:

C

Question 8

You need to package the automations.

What should you do?

- A. Show dependencies within the solution.

- B. Remove unmanaged layers.

- C. Add existing components to the solution.

- D. Add required components to each item within the solution.

Answer:

D

Question 9

HOTSPOT

Case study


Background

Woodgrove Bank is a large, member-owned bank in the United States. Woodgrove Bank provides financial products with low customer fees and direct customer service.

Woodgrove Bank has 177 branches across the United States, with 5,000 branch staff and supervisors serving over 750,000 members. Most members use English or Spanish when interacting with customer service representatives. The Woodgrove Bank headquarters is in California and has 450 office workers. The office workers include financial advisors, customer service representatives, finance clerks, and IT personnel.

Current environment. Bank applications

An application named Banker Desktop. The branch employees use this desktop app to review business transactions and to perform core banking updates.

An application named Member Management System. This application is a custom customer relationship management (CRM) system that integrates with other systems by using an API.

An application named Fraud Finder. This application is a mission-critical fraud management application that runs on the employees' desktops. The bank has experienced challenges integrating the application with other systems, and the application is expensive to support.

SharePoint Online provides an employee intranet as well as a member document management system that includes policies, contracts, statements, and financial planning documents.

Microsoft Excel is used to perform calculations and run macros. Branch employees may have multiple Microsoft Excel workbooks open on their desktop simultaneously.

Current environment. Bank devices

All supervisors are provided with a mobile device that can be used to access company email and respond to approval requests.

All branch employees and supervisors are provided with a Windows workstation.

Requirements. New member enrollment

Woodgrove Bank requires new members to sign up online to start the onboarding process. The bank requires some manual steps to be performed during the onboarding process.

First step:

Members complete an online Woodgrove Bank document and email the PDF attachment to the bank's shared mailbox for processing.

Second step:

Members are asked to provide secondary identification to their local branch, such as a utility bill, to validate their physical address.

Branch staff scan the secondary identification in English or Spanish using optical character recognition (OCR) technology.

Third step:

A branch supervisor approves the member's application from their mobile device.

Only supervisors are authorized to complete application approvals.

Fourth step:

Data that is received from applications must be validated to ensure it adheres to the bank's naming standards.

The bank has the following requirements for the members' data:

New members must be enrolled by using the document automation solution.

Member data is subject to regulatory requirements and should not be used for non-business purposes.

A desktop workflow is required to retrieve member information from the Member Management System on-demand or by using a cloud flow.

Requirements. Bank fees

The process for calculating bank fees includes:

using a shared Excel fee workbook with an embedded macro, and

an attended desktop flow that is required to automate the fee workbook process. The flow should open an Excel workbook and calculate the members' fees based on the number of products.

Requirements. Fraud detection

The bank has the following requirements to minimize fraud:

Branch employees must use the Fraud Finder application during onboarding to validate a member's identity with other third-party systems.

Branch employees must be able to search for a member in the Fraud Finder application by using a member's full name or physical address.

If fraudulent activity is identified, a notification with member details must be sent to the internal fraud investigation team.

Requirements. Technical

The bank has the following technical requirements:

Flows

The Fraud Finder application uses a custom connector with Power Automate to run fraud checks.

The application approval process triggers a cloud flow, which starts an attended desktop flow on the branch employee's workstation and completes the approval.

The banker desktop flow runs using the default priority.

An IT administrator is the co-owner of the banker desktop flow.

The IT department will be installing the required OCR language packs.

The Extract text with OCR action is used to import the members' secondary identification.

Member Management System

A secure Azure function requires a subscription key to retrieve member information.

Production flows must connect to the Member Management System with a custom connector. The connector uses the Azure function to perform programmatic retrievals, creates, and updates.

The host URL has been added to the custom connector as a new pattern.

A tenant-level Microsoft Power Platform data loss prevention (DLP) policy has been created to manage the production environment.

A developer creates a desktop flow to automate data entry into a test instance of the Member Management System.

A developer creates an on-demand attended desktop flow to connect to a data validation site and retrieve the most current information for a member.

Banker desktop application

A banker desktop flow is required to update the core banking system with other systems.

When a transaction is complete, the branch employee submits the request by using a submit button.

After submitting the request, an instant cloud flow calls an unattended desktop flow to complete the core banking update.

The banker desktop flow must be prioritized for all future transactions.

Deployment & testing

Development data must be confined to the development environment until the data is ready for user acceptance testing (UAT).

The production environment in SharePoint Online must connect to the development instance of the Member Management System.

Developers must be able to deploy software every two weeks during a scheduled maintenance window.

The banker desktop flow must continue to run during any planned maintenance.

The fraud custom connector requires a policy operation named EscalateForFraud with a parameter that uses the member's full name in the request.

Scalability

The bank requires a machine group to distribute the automation workload and to optimize productivity.

The IT administrator needs to silently register 20 new machines to Power Automate and then add them to the machine group.

Security

The IT administrator uses a service principal account for machine connection.

The IT administrator has the Desktop Flow Machine Owner role.

Issues

A branch staff member reports that the document automation solution is not processing new members' data and that emails are not being sent for approvals.

An IT administrator reports that the banker desktop flow has become unresponsive because of data that is queued in another flow.

Code

A Power Automate developer created the following script for the Member Management System desktop flow:

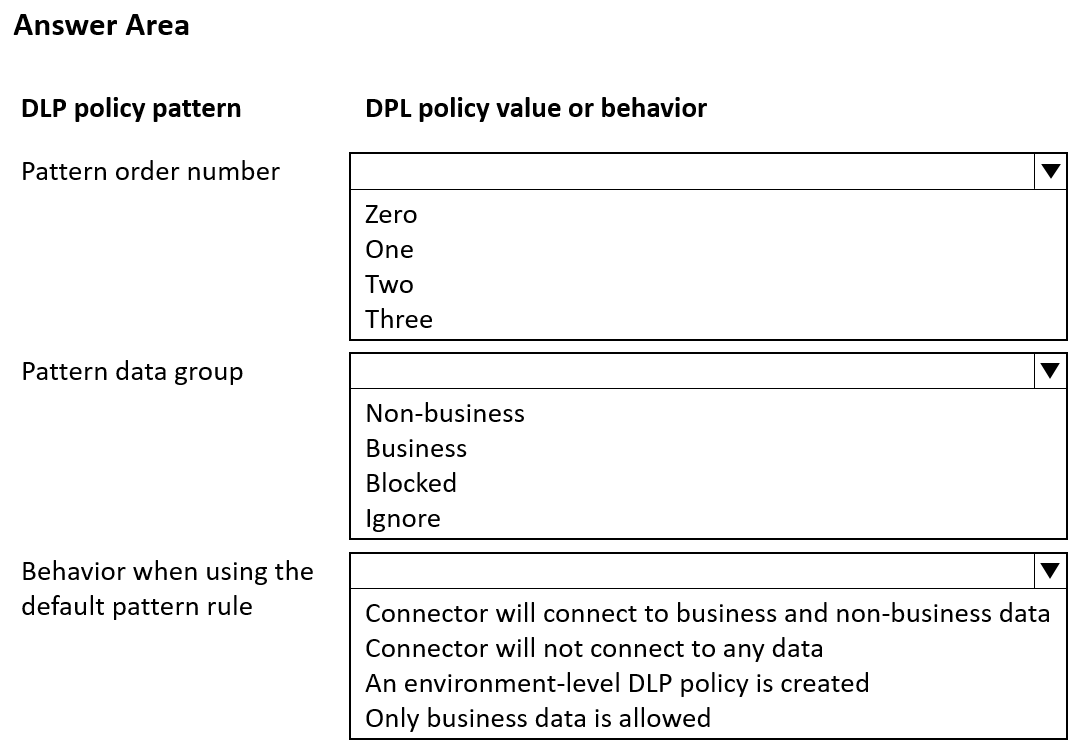

You need to identify the values or the behavior for the pattern added to the tenant Microsoft Power Platform data loss prevention (DLP) policy.

What should you identify? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Question 10

HOTSPOT

Case study


Background

City Power and Light is one of the biggest energy companies in North America. They extract, produce and transport oil. The company has more than 50 offices and 100 oil extraction facilities throughout the United States, Canada, and Mexico. They use railways, trucks, and pipelines to move oil and gas from their facilities.

The company provides the following services:

Produce oil from oil sands safely, responsibly, and reliably.

Refine crude oil into high-quality products.

Develop and manage wind power facilities.

Transport oil to different countries/regions.

City Power and Light uses various Microsoft software products to manage its daily activities and run its mission-critical applications.

Requirements

ManagePipelineMaintenanceTasks

A user named Admin1 creates a cloud flow named ManagePipelineMaintenanceTasks. Admin1 applies a data loss prevention (DLP) policy to the flow. Admin1 shares the flow with a user named PipelineManager1 as co-owner. You must determine the actions that PipelineManager1 can perform.

You create a cloud flow named MaintenanceScheduler that uses a desktop flow. The desktop flow connects to third-party services to fetch information. You must not permit the desktop flow to run for more than 20 minutes.

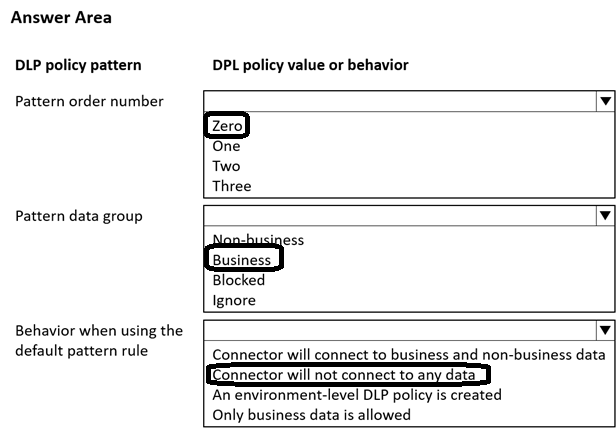

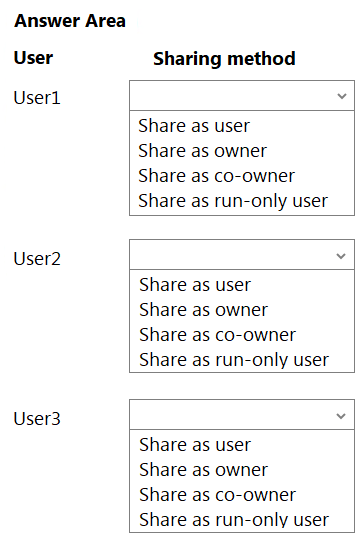

You must configure sharing for MaintenanceScheduler to meet the following requirements:

User1 must be able to work with you to modify the desktop flow.

User2 must be able to access and review the run history for the cloud flow.

You must grant User3 permissions to run but not modify the desktop flow.

ERPDataOperations flow

City Power and Light uses an enterprise resource planning (ERP) system. The ERP system does not have an API.

Each day, the company receives an email that contains an attachment. The attachment lists orders from the company's rail transportation partners. You must create an automation solution that reads the contents of the email and writes records to the ERP system. The solution must pass credentials from a cloud flow to a desktop flow.

RailStatusUpdater

City Power and Light actively monitors all products in transit. You must create a flow named RailStatusUpdater that manages communications with the railways that transport the company's products. RailStatusUpdater includes five desktop flow actions.

You must run the desktop flows in attended mode during testing. You must run the desktop flows in unattended mode after you deploy the solution. You must minimize administrative efforts.

Packaging

All flow automations must be created in a solution. All required components to support the flows must be included in the solution.

Issues

ProductionMonitor flow

You create a cloud flow named ProductionMonitor which uses the Manually trigger a flow trigger. You plan to trigger ProductionMonitor from a cloud flow named ProdManager.

You add a Run a Child flow action in ProdManager to trigger ProductionMonitor. When you attempt to save ProdManager, the following error message displays:

Request to XRM API failed with error: 'Message:Flow client error returned with status code Bad request and details (error: {code:ChildFlowUnsupportedForinvokerConnections, message: The workflow with id 8d3bcde7-7e98-eb11-b1ac-000d3a32d53f, named FlowA cannot be used as a child workflow because child workflows only support embedded connections. }}Code 0x80060467 InnerError.'

CapacityPlanning flow

Developers within the company use cloud flows to access data from an on-premises capacity planning system.

You observe significant increases to the volume of traffic that the on-premises data gateway processes each day. You must minimize gateway failures.

DataCollector flow

You have a desktop flow that interacts with a web form. The flow must write data to several fields on the form.

You are testing the flow. The flow fails when attempting to write data to any field on the web form.

RailStatusUpdater flow

The RailStatusUpdater flow occasionally fails due to machine connection errors. You can usually get the desktop flow to complete by resubmitting the cloud flow run. You must automate the retry process to ensure that you do not need to manually resubmit the cloud flow when machine connection errors occur.

You need to configure sharing for MaintenanceScheduler.

Which sharing methods should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer: