Question 1

Database XYZ has the data_retention_time_in_days parameter set to 7 days and table XYZ.public.ABC has the data_retention_time_in_days set to 10 days.

A Developer accidentally dropped the database containing this single table 8 days ago and just discovered the mistake.

How can the table be recovered?

- A. undrop database xyz;

- B. create table abc_restore as select * from xyz.public.abc at (offset => -60*60*24*8);

- C. create table abc_restore clone xyz.public.abc at (offset => -3600*24*8);

- D. Create a Snowflake Support case to restore the database and table from Fail-safe.

Answer:

a

Comments

Question 2

Which Snowflake feature facilitates access to external API services such as geocoders, data transformation, machine learning models, and other custom code?

- A. Security integration

- B. External tables

- C. External functions

- D. Java User-Defined Functions (UDFs)

Answer:

c

Comments

Question 3

While running an external function, the following error message is received:

Error: Function received the wrong number of rows

What is causing this to occur?

- A. External functions do not support multiple rows.

- B. Nested arrays are not supported in the JSON response.

- C. The JSON returned by the remote service is not constructed correctly.

- D. The return message did not produce the same number of rows that it received.

Answer:

d

Comments

Question 4

A Data Engineer would like to define a file structure for loading and unloading data.

Where can the file structure be defined? (Choose three.)

- A. COPY command

- B. MERGE command

- C. FILE FORMAT object

- D. PIPE object

- E. STAGE object

- F. INSERT command

Answer:

ace

Comments

Question 5

A Data Engineer enables a result cache at the session level with the following command:

ALTER SESSION SET USE_CACHED_RESULT = TRUE;

The Engineer then runs the following SELECT query twice without delay:

SELECT *

FROM SNOWFLAKE_SAMPLE_DATA.TPCH_SF1.CUSTOMER

SAMPLE(10) SEED (99);

The underlying table does not change between executions.

What are the results of both runs?

- A. The first and second run returned the same results, because SAMPLE is deterministic.

- B. The first and second run returned the same results, because the specific SEED value was provided.

- C. The first and second run returned different results, because the query is evaluated each time it is run.

- D. The first and second run returned different results, because the query uses * instead of an explicit column list.

Answer:

b

Comments

Question 6

A Data Engineer has developed a dashboard that will issue the same SQL select clause to Snowflake every 12 hours.

How long will Snowflake use the persisted query results from the result cache, provided that the underlying data has not changed?

- A. 12 hours

- B. 24 hours

- C. 14 days

- D. 31 days

Answer:

b

Comments

Question 7

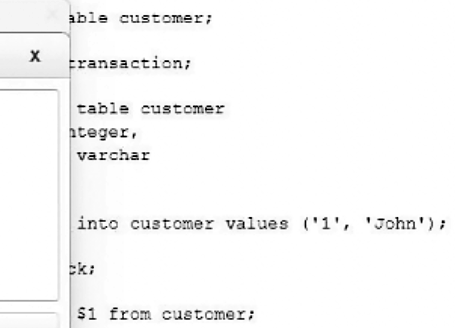

The following code is executed in a Snowflake environment with the default settings:

What will be the result of the select statement?

- A. SQL compilation error: Object 'CUSTOMER' does not exist or is not authorized.

- B. John

- C. 1

- D. 1John

Answer:

a

Comments

Question 8

A CSV file, around 1 TB in size, is generated daily on an on-premise server. A corresponding table, internal stage, and file format have already been created in Snowflake to facilitate the data loading process.

How can the process of bringing the CSV file into Snowflake be automated using the LEAST amount of operational overhead?

- A. Create a task in Snowflake that executes once a day and runs a COPY INTO statement that references the internal stage. The internal stage will read the files directly from the on-premise server and copy the newest file into the table from the on-premise server to the Snowflake table.

- B. On the on-premise server, schedule a SQL file to run using SnowSQL that executes a PUT to push a specific file to the internal stage. Create a task that executes once a day in Snowflake and runs a COPY INTO statement that references the internal stage. Schedule the task to start after the file lands in the internal stage.

- C. On the on-premise server, schedule a SQL file to run using SnowSQL that executes a PUT to push a specific file to the internal stage. Create a pipe that runs a COPY INTO statement that references the internal stage. Snowpipe auto-ingest will automatically load the file from the internal stage when the new file lands in the internal stage.

- D. On the on-premise server, schedule a Python file that uses the Snowpark Python library. The Python script will read the CSV data into a DataFrame and generate an INSERT INTO statement that will directly load into the table. The script will bypass the need to move a file into an internal stage.

Answer:

b

Comments

Question 9

A Data Engineer executes a complex query and wants to make use of Snowflakes query results caching capabilities to reuse the results.

Which conditions must be met? (Choose three.)

- A. The results must be reused within 72 hours.

- B. The query must be executed using the same virtual warehouse.

- C. The USED_CACHED_RESULT parameter must be included in the query.

- D. The table structure contributing to the query result cannot have changed.

- E. The new query must have the same syntax as the previously executed query.

- F. The micro-partitions cannot have changed due to changes to other data in the table.

Answer:

edf

Comments

Question 10

A Data Engineer wants to create a new development database (DEV) as a clone of the permanent production database (PROD). There is a requirement to disable Fail-safe for all tables.

Which command will meet these requirements?

- A. CREATE DATABASE DEV -CLONE PROD -FAIL_SAFE = FALSE;

- B. CREATE DATABASE DEV -CLONE PROD;

- C. CREATE TRANSIENT DATABASE DEV -CLONE PROD;

- D. CREATE DATABASE DEV -CLONE PROD -DATA_RETENTION_TIME_IN DAYS = 0;

Answer:

c

Comments

Page 1 out of 6

Viewing questions 1-10 out of 65

page 2