Splunk SPLK-3003 Practice Test

Splunk Core Certified Consultant Exam

Last exam update: Mar 26, 2025

Question 1

A customer has 30 indexers in an indexer cluster configuration and two search heads. They are working on an SPL search for a particular use case, but are concerned that it takes too long to run, even over short time ranges.

How can the Search Job Inspector capabilities be used to help validate and understand the customer's concerns?

- A. Search Job Inspector provides statistics showing how much time each indexer spent and how many events it processed.

- B. Search Job Inspector provides a Search Health Check capability that provides an optimized SPL query the customer should try instead.

- C. Search Job Inspector cannot be used to help troubleshoot the slow performing search; customer should review index=_introspection instead.

- D. The customer is using the transaction SPL search command, which is known to be slow.

Answer:

A

Question 2

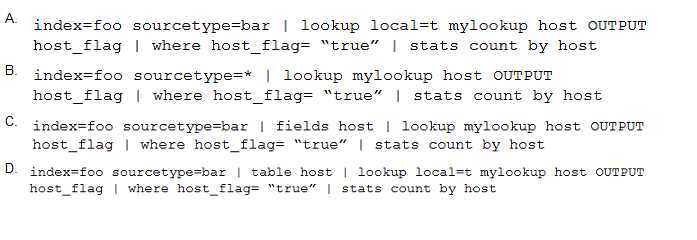

Which of the following is the most efficient search?

- A. Option A

- B. Option B

- C. Option C

- D. Option D

Answer:

C

Question 3

In a large cloud customer environment with many (>100) dynamically created endpoint systems, each with a UF already deployed, what is the best approach for associating these systems with an appropriate serverclass on the deployment server?

- A. Work with the cloud orchestration team to create a common host-naming convention for these systems so a simple pattern can be used in the serverclass.conf whitelist attribute.

- B. Create a CSV lookup file for each serverclass, manually keep track of the endpoints within this CSV file, and leverage the whitelist.from_pathname attribute in serverclass.conf.

- C. Work with the cloud orchestration team to dynamically insert an appropriate clientName setting into each endpoint's local/deploymentclient.conf, which can be matched by a whitelist in serverclass.conf.

- D. Using an installation bootstrap script, run a CLI command to assign a clientName setting and permit serverclass.conf whitelist simplification.

Answer:

C
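A minimal sketch of what option C produces, assuming a hypothetical naming scheme (`cloud_web_` plus an instance ID), a hypothetical deployment server address `ds.example.com:8089`, and a hypothetical server class `cloud_web`. The orchestration tooling writes the first two stanzas into each endpoint's `local/deploymentclient.conf`; the deployment server then matches every such client with a single wildcard in `serverclass.conf`.

```ini
# local/deploymentclient.conf on each endpoint (written by orchestration)
[deployment-client]
# Hypothetical naming scheme: role prefix plus a unique instance ID
clientName = cloud_web_i-0abc123

[target-broker:deploymentServer]
# Hypothetical deployment server host and management port
targetUri = ds.example.com:8089

# serverclass.conf on the deployment server
[serverClass:cloud_web]
# whitelist entries are matched against the client's clientName,
# IP address, or host name; one pattern covers all new endpoints
whitelist.0 = cloud_web_*
```

Because the pattern keys off clientName rather than host names or IPs, new endpoints join the right server class as soon as they phone home, with no change on the deployment server.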

Question 4

The Splunk Validated Architectures (SVAs) document provides a series of approved Splunk topologies. Which statement accurately describes how it should be used by a customer?

- A. Customers should look at the category tables, pick the highest number that their budget permits, then select this design topology as the chosen design.

- B. Customers should identify their requirements, provisionally choose an approved design that meets them, then consider design principles and best practices to come to an informed design decision.

- C. Using the guided requirements gathering in the SVAs document, choose a topology that suits requirements, and be sure not to deviate from the specified design.

- D. Choose an SVA topology code that includes Search Head and Indexer Clustering because it offers the highest level of resilience.

Answer:

B

Reference:

https://www.splunk.com/en_us/blog/tips-and-tricks/splunk-validated-architectures.html

Question 5

Which of the following server roles should be configured for a host which indexes its internal logs locally?

- A. Cluster master

- B. Indexer

- C. Monitoring Console (MC)

- D. Search head

Answer:

B

Reference:

https://community.splunk.com/t5/Deployment-Architecture/How-to-identify-Splunk-Instance-role-by-internal-logs/m-p/365555

Question 6

When using SAML, where does user authentication occur?

- A. Splunk generates a SAML assertion that authenticates the user.

- B. The Service Provider (SP) decodes the SAML request and authenticates the user.

- C. The Identity Provider (IDP) decodes the SAML request and authenticates the user.

- D. The Service Provider (SP) generates a SAML assertion that authenticates the user.

Answer:

C

Question 7

A customer is migrating their existing Splunk indexers from an old set of hardware to a new set of indexers. What is the easiest method to migrate the system?

- A. 1. Add new indexers to the cluster as peers, in the same site (if needed). 2. Ensure new indexers receive common configuration. 3. Decommission old indexers (one at a time) to allow time for CM to fix/migrate buckets to new hardware. 4. Remove all the old indexers from the CM's list.

- B. 1. Add new indexers to the cluster as peers, to a new site. 2. Ensure new indexers receive common configuration from the CM. 3. Decommission old indexers (one at a time) to allow time for CM to fix/migrate buckets to new hardware. 4. Remove all the old indexers from the CM's list.

- C. 1. Add new indexers to the cluster as peers, in the same site. 2. Update the replication factor by +1 to instruct the cluster to start replicating to new peers. 3. Allow time for CM to fix/migrate buckets to new hardware. 4. Remove all the old indexers from the CM's list.

- D. 1. Add new indexers to the cluster as a new site. 2. Update cluster master (CM) server.conf to include the new available site. 3. Allow time for CM to fix/migrate buckets to new hardware. 4. Remove the old indexers from the CM's list.

Answer:

B

Question 8

When utilizing a subsearch within a Splunk SPL search query, which of the following statements is accurate?

- A. Subsearches have to be initiated with the | subsearch command.

- B. Subsearches can only be utilized with | inputlookup command.

- C. Subsearches have a default result output limit of 10000.

- D. There are no specific limitations when using subsearches.

Answer:

C

Reference:

https://docs.splunk.com/Documentation/Splunk/8.0.6/Search/Aboutsubsearches#:~:text=By%20default%2C%20subsearches%20return%20a,will%20timeout%20before%20it%20completes
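The 10,000-result limit comes from the `[subsearch]` stanza of `limits.conf`. The fragment below restates the documented defaults as a reference sketch; check the `limits.conf` specification for your Splunk version before changing either value.

```ini
# limits.conf: default limits applied to subsearches
[subsearch]
# Maximum number of results a subsearch passes to the outer search
maxout = 10000
# Maximum number of seconds a subsearch runs before it is finalized
maxtime = 60
```

Results beyond `maxout` are silently dropped, which is why a subsearch that feeds a large list of values into the outer search can return incomplete results.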

Question 9

A customer is using both internal Splunk authentication and LDAP for user management.

If a username exists in both $SPLUNK_HOME/etc/passwd and LDAP, which of the following statements is accurate?

- A. The internal Splunk authentication will take precedence.

- B. Authentication will only succeed if the password is the same in both systems.

- C. The LDAP user account will take precedence.

- D. Splunk will error as it does not support overlapping usernames.

Answer:

A
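A setup like the one in this question is typically configured in `authentication.conf`. In the minimal sketch below, the strategy name `corp_ldap`, the host, the bind account, and all DNs are hypothetical placeholders. Even with `authType = LDAP`, Splunk native authentication takes precedence, so an account defined in `$SPLUNK_HOME/etc/passwd` is matched before LDAP is consulted, consistent with answer A.

```ini
# authentication.conf: LDAP enabled alongside native Splunk accounts
[authentication]
authType = LDAP
# Name of the LDAP strategy stanza below (hypothetical)
authSettings = corp_ldap

[corp_ldap]
# Hypothetical directory server, bind account, and base DNs
host = ldap.example.com
port = 636
SSLEnabled = 1
bindDN = cn=splunk-bind,ou=service,dc=example,dc=com
bindDNpassword = <password>
userBaseDN = ou=people,dc=example,dc=com
userNameAttribute = uid
realNameAttribute = cn
groupBaseDN = ou=groups,dc=example,dc=com
groupMemberAttribute = member
groupNameAttribute = cn
```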

Question 10

When setting up a multisite search head and indexer cluster, which nodes are required to declare site membership?

- A. Search head cluster members, deployer, indexers, cluster master

- B. Search head cluster members, deployment server, deployer, indexers, cluster master

- C. All Splunk nodes, including forwarders, must declare site membership

- D. Search head cluster members, indexers, cluster master

Answer:

D

Reference:

https://docs.splunk.com/Documentation/Splunk/8.1.0/DistSearch/SHCandindexercluster
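As a sketch of what declaring site membership looks like: each search head cluster member, indexer, and the cluster master sets `site` in the `[general]` stanza of `server.conf`, and the cluster master additionally enables multisite clustering. The site names and replication/search factors below are hypothetical values for a two-site deployment.

```ini
# server.conf on every indexer, search head cluster member, and the cluster master
[general]
# Site this node belongs to (site1 or site2 in this example)
site = site1

# Additional server.conf settings on the cluster master only
[clustering]
# Splunk 9.x also accepts the newer value mode = manager
mode = master
multisite = true
available_sites = site1,site2
site_replication_factor = origin:2,total:3
site_search_factor = origin:1,total:2
```

The deployer and deployment server do not appear here because they do not participate in site-aware replication or search affinity.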

Question 11

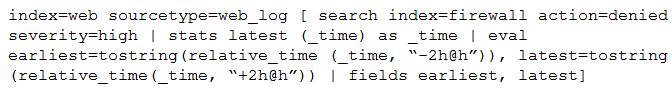

Consider the search shown above.

What is this search's intended function?

- A. To return all the web_log events from the web index that occur two hours before and after the most recent high severity, denied event found in the firewall index.

- B. To find all the denied, high severity events in the firewall index, and use those events to further search for lateral movement within the web index.

- C. To return all the web_log events from the web index that occur two hours before and after all high severity, denied events found in the firewall index.

- D. To search the firewall index for web logs that have been denied and are of high severity.

Answer:

C

Question 12

Which of the following is the most efficient search?

- A. index=www status=200 uri=/cart/checkout | append [search index = sales] | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

- B. (index=www status=200 uri=/cart/checkout) OR (index=sales) | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

- C. index=www | append [search index = sales] | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

- D. (index=www) OR (index=sales) | search (index=www status=200 uri=/cart/checkout) OR (index=sales) | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

Answer:

B

Question 13

A customer has three users and is planning to ingest 250GB of data per day. They are concerned with search uptime, can tolerate up to a two-hour downtime for the search tier, and want advice on a single search head versus a search head cluster (SHC).

Which recommendation is the most appropriate?

- A. The customer should deploy two active search heads behind a load balancer to support HA.

- B. The customer should deploy a SHC with a single member for HA; more members can be added later.

- C. The customer should deploy a SHC, because it will be required to support the high volume of data.

- D. The customer should deploy a single search head with a warm standby search head and an rsync process to synchronize configurations.

Answer:

D

Question 14

A Splunk indexer cluster is being installed and the indexers need to be configured with a license master. After the customer provides the name of the license master, what is the next step?

- A. Enter the license master configuration via Splunk web on each indexer before disabling Splunk web.

- B. Update /opt/splunk/etc/master-apps/_cluster/default/server.conf on the cluster master and apply a cluster bundle.

- C. Update the Splunk PS base config license app and copy to each indexer.

- D. Update the Splunk PS base config license app and deploy via the cluster master.

Answer:

C
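Whichever delivery mechanism is chosen, the setting the license app carries for each indexer is a `[license]` stanza in `server.conf`. A minimal sketch, where `lm.example.com` is a placeholder for the license master name the customer provides:

```ini
# server.conf inside the license app placed on each indexer
[license]
# Placeholder host; 8089 is the default management port
# (Splunk 9.x also accepts the newer name manager_uri)
master_uri = https://lm.example.com:8089
```

Keeping this single setting in a dedicated app means a later license master change touches one file rather than each indexer's system configuration.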

Question 15

When can the Search Job Inspector be used to debug searches?

- A. If the search has not expired.

- B. If the search is currently running.

- C. If the search has been queued.

- D. If the search has expired.

Answer:

A

Reference:

https://docs.splunk.com/Documentation/Splunk/8.1.0/Search/ViewsearchjobpropertieswiththeJobInspector